One of the many advantages of being an IBM business partner is that we are regularly invited to workshops where IBM shares its vision and insights on its product portfolio. Last week I was able to attend the GSI Lab workshop “IBM Hybrid Cloud Platform 2022 Update” at the IBM Systems Centre in Montpellier.

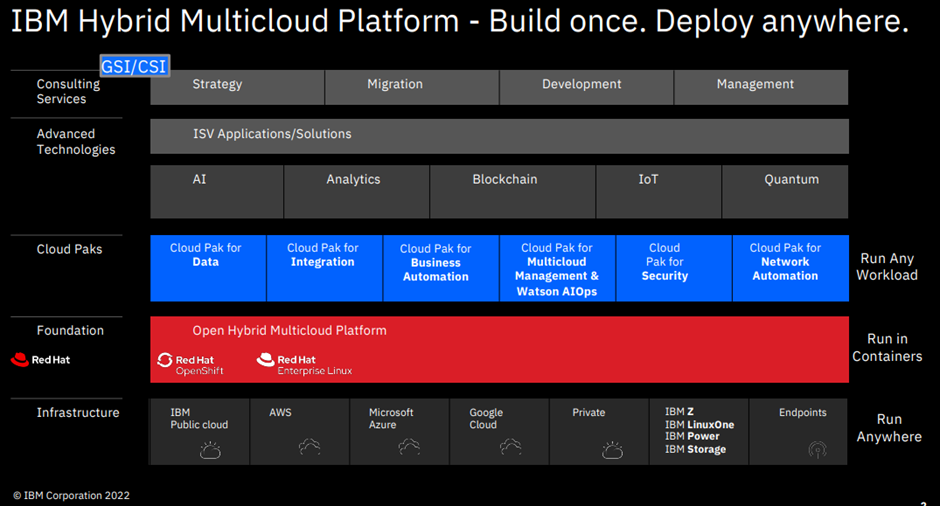

My key takeaway from this event was that IBM has really succeeded in implementing its hybrid cloud vision: “Build once. Deploy anywhere.”

Multi-cloud

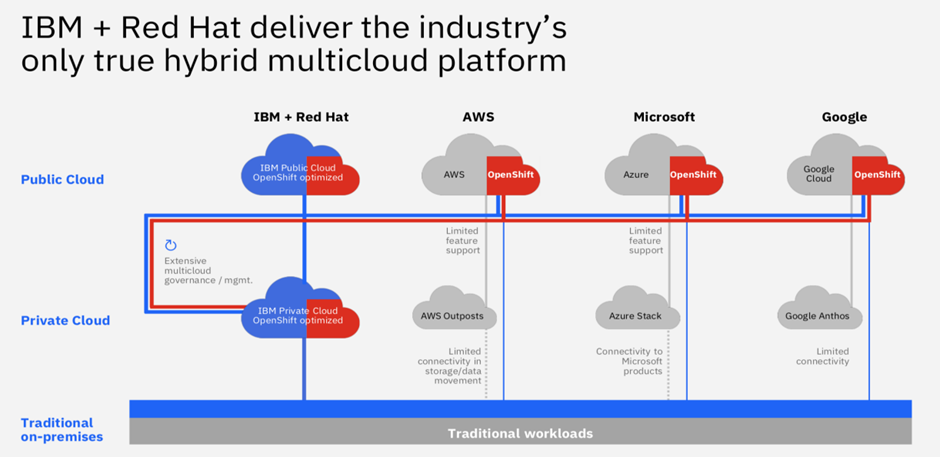

We can look at the hybrid model on different levels. When most of us hear “hybrid cloud”, we think of multiple cloud providers combined with on-premises server installations. IBM has seen the trend of enterprises moving their workloads to the cloud, but it now also sees enterprises moving some workloads back to their on-premises infrastructure for various reasons: cost (running a workload in the cloud is typically two to three times as expensive as running it on premises), regulations, access to data, …

With the acquisition of Red Hat, IBM can now run the same software stack on any cloud provider (public or private) and on on-premises infrastructure.

Multi-platform

A second level of the hybrid model is the infrastructure the software runs on.

I was a bit surprised when they started talking about the mainframe. To be honest, I always thought of the mainframe as a bit outdated, more of a necessity than a real advantage. In diagrams of modern IT architectures, it was always tucked away in a corner of the slide, outside the hybrid model. Agility and the mainframe seemed like fire and water.

Boy, was I wrong. IBM can now run an OpenShift cluster on the mainframe. It has also made significant improvements in integrating the mainframe into CI/CD pipelines, with tools that expose Python and Java libraries and a CLI to manage the mainframe. Creating a dataset, for example, can now be scripted in a shell or Python script instead of clicking through 20 different screens.
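The workshop did not name specific tools here, but Zowe (an Open Mainframe Project effort with a CLI and client SDKs) is one example of this kind of tooling. As a minimal sketch, assuming the Zowe CLI is installed and a z/OSMF connection profile is already configured, a Python script could create a sequential dataset as shown below. The dataset name `MYHLQ.DEMO.DATA` and the helper function names are hypothetical; by default the script only prints the command (dry run).

```python
import shlex
import subprocess


def build_create_ps_command(dataset_name: str,
                            record_format: str = "FB",
                            record_length: int = 80) -> list[str]:
    """Build a Zowe CLI command that creates a sequential (PS) dataset."""
    return [
        "zowe", "zos-files", "create", "data-set-sequential", dataset_name,
        "--record-format", record_format,
        "--record-length", str(record_length),
    ]


def create_dataset(dataset_name: str, dry_run: bool = True) -> list[str]:
    """Create the dataset, or only print the command when dry_run is True."""
    cmd = build_create_ps_command(dataset_name)
    if dry_run:
        # Print the shell-quoted command instead of running it.
        print(shlex.join(cmd))
    else:
        # Assumption: the Zowe CLI is on PATH and a z/OSMF profile is set up.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Hypothetical high-level qualifier and dataset name.
    create_dataset("MYHLQ.DEMO.DATA")
```

Because the command is just a list of arguments, the same step can run unchanged from a CI/CD pipeline job.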

IBM can now also run OpenShift clusters on IBM Power Systems. If you combine technologies like Red Hat Advanced Cluster Management with multi-architecture Docker manifests, you can run your code everywhere. If you need to deploy a containerized application that communicates heavily with a CICS system, you can deploy it to a cluster on z/OS with the click of a button. The same containers can be deployed to a Power System or to your favourite cloud provider. One thing that still needs attention is that you have to build your container images for each target architecture, so code portability remains a point of attention.
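To make the multi-architecture point concrete: a multi-architecture image is published as an image index (the OCI name for Docker's manifest list) that maps each platform to an architecture-specific image manifest, and the container runtime pulls the entry that matches its own platform. The sketch below models that selection in Python. The digests and sizes are made-up placeholders, and the helper names are hypothetical; `s390x`, `ppc64le` and `amd64` are the architecture names used for IBM Z, Power and x86.

```python
import json

INDEX_MEDIA_TYPE = "application/vnd.oci.image.index.v1+json"
MANIFEST_MEDIA_TYPE = "application/vnd.oci.image.manifest.v1+json"


def make_index(digests_by_arch: dict[str, str]) -> dict:
    """Build a minimal OCI image index with one manifest per architecture."""
    return {
        "schemaVersion": 2,
        "mediaType": INDEX_MEDIA_TYPE,
        "manifests": [
            {
                "mediaType": MANIFEST_MEDIA_TYPE,
                "digest": digest,
                "size": 1234,  # placeholder size of the referenced manifest
                "platform": {"architecture": arch, "os": "linux"},
            }
            for arch, digest in digests_by_arch.items()
        ],
    }


def select_manifest(index: dict, arch: str, os_name: str = "linux") -> str:
    """Return the digest of the manifest built for the given platform."""
    for entry in index["manifests"]:
        platform = entry["platform"]
        if platform["architecture"] == arch and platform["os"] == os_name:
            return entry["digest"]
    # No image was built for this platform: the portability gap.
    raise LookupError(f"no image built for {os_name}/{arch}")


if __name__ == "__main__":
    index = make_index({
        "amd64": "sha256:" + "a" * 64,    # x86 build (placeholder digest)
        "s390x": "sha256:" + "b" * 64,    # IBM Z build (placeholder digest)
        "ppc64le": "sha256:" + "c" * 64,  # IBM Power build (placeholder digest)
    })
    print(json.dumps(index, indent=2))
    print(select_manifest(index, "s390x"))
```

If no image was built for a platform (for example `arm64` above), the lookup fails, which is exactly the portability caveat: the index only helps for the architectures you actually built.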

Cloud Paks

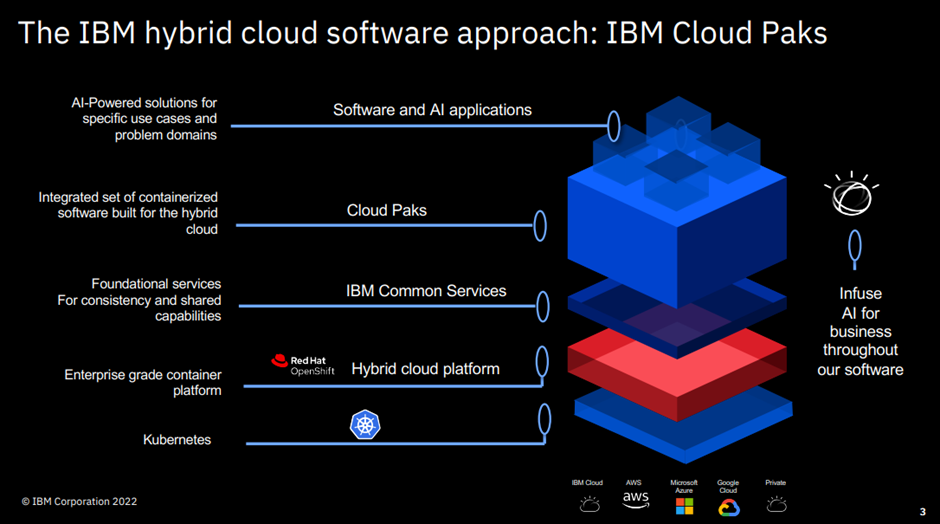

What I like about IBM is that they don’t just acquire other companies and resell their software; they have a vision of what they want to do with it. With Red Hat OpenShift, they can run their software everywhere. That alone would be a very good selling point, but they didn’t stop there. As most of you know, they have created Cloud Paks. But they didn’t just bundle some software together: they created an additional layer on top of OpenShift containing foundational (common) services that all the Cloud Paks can use. This allows them to further enhance their middleware, for example with AI capabilities. I know AI is a buzzword and most technical people will be sceptical about it.

But let’s look at our favourite Cloud Pak: IBM Cloud Pak for Integration (CP4I). IBM has really succeeded in leveraging AI to make developers’ lives easier. Take API testing, for example. Everybody agrees it is important, but nobody wants to do it, mainly because it is time-consuming and labour-intensive. In the new version of CP4I, you can press the insight button in the test client and CP4I will use its historical data to generate a list of test cases. You can then select the test cases you want and add them to your test suite. Will it be perfect? Of course not. Will it be a good starting point? Yes! Similarly, there are AI capabilities to help create mappings.

We sometimes have discussions with clients who think IBM is expensive and that choosing it will leave them with vendor lock-in. Why wouldn’t they use the integration capabilities of their favourite cloud platform, which seem much cheaper or are sometimes even free?

The problem I have with these tools is that they are relatively new products that can only run in their provider’s cloud. So instead of a software vendor lock-in, you are now committing yourself to a cloud provider with a new product. How will the pricing evolve in a few years, once you have migrated all your workloads to that cloud? How will you handle on-premises integration? Will you need a different skill set for cloud integration and on-premises integration? What if the provider decides to discontinue the product? What features does the product actually offer?

CP4I, on the other hand, combines different middleware products that are all positioned as Leaders in Gartner’s Magic Quadrant. Most of them have been on the market for decades and have consistently kept up with market trends and new integration needs. I have a hard time imagining an integration use case that can’t easily be solved with CP4I: file-based, REST-based, messaging, event streaming, … are all fully supported by market-leading products.

Am I saying you should never use these cloud integration services? Of course not. If you have an integration that lives entirely in the cloud, it can be perfectly fine to implement it with those services. But I would be very sceptical about using them for your business-critical integrations.

Another question we sometimes get is how the standalone products (App Connect Enterprise, API Connect, IBM MQ, …) and CP4I will evolve. IBM’s statement is that the standalone products will continue to exist. Development will focus on CP4I, and new features will afterwards be added to the standalone products where possible. Features that depend on the Cloud Pak foundational services (e.g. AI) cannot be added to the standalone products.

Other

The workshop was packed with exciting new ideas and use cases. I have only picked a few of them to discuss in this blog, but I am also excited about some other topics:

- IBM Cloud Satellite: With IBM Cloud Satellite you can run IBM Cloud services on your own infrastructure, on edge servers and even on other cloud providers (AWS, Google Cloud, Azure, …). For example, you can create an IBM-managed Red Hat OpenShift cluster in your Azure environment.

- Red Hat Advanced Cluster Management: a Red Hat solution to manage all your OpenShift clusters. It reminded me of IBM Cloud Pak for Multicloud Management. IBM saw that Red Hat had the better solution, and the two now work together on this product. I saw some impressive demos in which the same application was deployed to both an x86 infrastructure and a Power cluster. They also mentioned Submariner, an open-source project that connects the networks of different Kubernetes clusters. I find this very exciting in our hybrid world.

Conclusion

I was really impressed with what IBM has done. For me, IBM’s main problem is its image. I think it can compete on agility with the latest trendy open-source tools, while offering all the capabilities needed to run enterprise-ready code (security, DR, logging, maintenance, continuity, …).

IBM Integration Specialists

Enabling Digital Transformations.

Find us here

Veldkant 33B

2550 Kontich

Belgium

Pedro de Medinalaan 81

1086XP Amsterdam

The Netherlands

© 2019 Integration Designers - Privacy policy - Part of Cronos Group & integr8 consulting
